Agentic Workflow Monitoring: Best Practices

2025-09-08

Agentic workflows are automation systems that make decisions independently, combining AI with workflow management. While these systems can handle complex tasks and reduce manual intervention, they require constant monitoring to ensure reliability, accuracy, and alignment with business goals. This is especially important as their autonomous nature can lead to unpredictable behaviours or silent errors.

Key monitoring practices include:

- Metrics to Track: Focus on task adherence, tool call accuracy, intent resolution, coherence, recoverability, latency, and throughput.

- Measurement Methods: Use a mix of automated tools, semi-automated approaches, and manual reviews for thorough oversight.

- Logging and Observability: Implement structured logging, real-time tracing, distributed tracing, and anomaly detection to maintain transparency.

- Error Recovery: Combine automated alerts with human oversight to quickly detect and resolve issues.

- Guardrails: Use validation and monitoring at every stage - pre-input, input, output, and post-output - to minimise risks.

Key Metrics for Monitoring

Agentic workflows require a more nuanced approach to measurement since success isn't a simple yes-or-no outcome. Evaluating independent decision-making can be complex, so the metrics need to assess the quality of decisions, the accuracy of autonomous actions, and how well they align with overall business goals. These metrics form the foundation for understanding and improving agentic workflows.

Core Metrics to Track

One of the most important metrics is task adherence, which measures how closely agents stick to their intended objectives while making independent decisions. Unlike traditional rule-based systems that either follow instructions or fail outright, agentic workflows can deviate from their purpose while still appearing functional. Tracking task adherence helps spot when agents start pursuing unintended goals or optimising for outcomes that don't align with business priorities. A drop in adherence could signal that the agent is learning patterns that seem logical but don't serve its intended purpose.

Another key metric is tool call accuracy, which evaluates how effectively agents use available resources and integrations. This ensures that the tools and systems the agent interacts with are being used as intended. Similarly, intent resolution focuses on whether the agent successfully fulfils the underlying purpose of each workflow, not just completing tasks on the surface.

Coherence tracking assesses the logical consistency of an agent's decisions over time. Ideally, agentic workflows should adapt to new situations while maintaining rational decision-making patterns. This metric flags issues like contradictory choices or erratic behaviour, which are especially concerning in areas like financial transactions or compliance-related tasks.

Recoverability measures how well the system handles unexpected errors and recovers without manual intervention. Unlike traditional systems that might simply crash, agentic workflows are designed to keep operating, even when problems arise. This metric evaluates the system's ability to identify issues, correct them, and return to optimal performance smoothly. It plays a crucial role in minimising disruptions and improving system reliability.

Finally, latency and throughput remain relevant but require a different perspective in agentic workflows. These metrics should account for the extra time needed for decision-making and adaptation. A slightly longer response time might be acceptable if it leads to better results overall.
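As a rough illustration of how the quantitative side of these metrics might be aggregated, the sketch below computes task adherence, tool call accuracy, recoverability, latency, and throughput from per-run records. The `RunRecord` fields and the `summarise` helper are hypothetical names invented for this example, and the sketch assumes each run has already been labelled (by a reviewer or an evaluator) as on-task or not. Intent resolution and coherence are left out because they usually need judgement rather than counting.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class RunRecord:
    """One workflow execution; every field name here is hypothetical."""
    on_task: bool              # run was judged to stay on its intended objective
    tool_calls: int            # total tool calls made during the run
    tool_calls_correct: int    # calls that used the right tool with valid arguments
    recovered: Optional[bool]  # None if no error occurred, else whether it self-recovered
    latency_s: float           # end-to-end wall-clock time in seconds


def summarise(runs: list[RunRecord], window_s: float) -> dict[str, float]:
    """Aggregate run records for one time window (assumes at least one run)."""
    total_calls = sum(r.tool_calls for r in runs)
    errored = [r for r in runs if r.recovered is not None]
    return {
        "task_adherence": sum(r.on_task for r in runs) / len(runs),
        "tool_call_accuracy": (
            sum(r.tool_calls_correct for r in runs) / total_calls if total_calls else 1.0
        ),
        "recoverability": (
            sum(bool(r.recovered) for r in errored) / len(errored) if errored else 1.0
        ),
        "median_latency_s": median(r.latency_s for r in runs),
        "throughput_per_s": len(runs) / window_s,
    }


runs = [
    RunRecord(True, 4, 4, None, 1.0),
    RunRecord(True, 2, 1, True, 3.0),
    RunRecord(False, 4, 3, False, 2.0),
    RunRecord(True, 0, 0, None, 2.0),
]
summary = summarise(runs, window_s=2.0)
print(summary)
```

Reporting latency next to adherence and accuracy makes it easier to judge whether a slower run was worth the extra time.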

How to Measure Metrics

With the key metrics defined, the next step is figuring out how to measure them effectively. Monitoring agentic workflows requires a mix of automated tools and human oversight. Fully automated systems might miss subtle issues, while manual evaluation alone isn't practical for continuous monitoring.

Automated measurement is ideal for quantitative metrics like latency, tool call success rates, and task completion. These systems can continuously track performance and generate alerts when metrics fall outside acceptable ranges. Using standard monitoring tools, organisations can start collecting these metrics without needing specialised systems.
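A minimal sketch of range-based alerting follows. The metric names and the acceptable ranges in `ACCEPTABLE` are assumptions made for illustration; real limits depend on the workflow and would normally live in the monitoring tool's own configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    low: float
    high: float


# Hypothetical acceptable ranges; tune these per workflow.
ACCEPTABLE = {
    "p95_latency_s": Range(0.0, 8.0),
    "tool_call_success_rate": Range(0.95, 1.0),
    "task_completion_rate": Range(0.90, 1.0),
}


def check(metrics: dict[str, float]) -> list[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for name, bounds in ACCEPTABLE.items():
        value = metrics.get(name)
        if value is None:
            # A metric that stops reporting is itself worth an alert.
            alerts.append(f"{name}: no data received")
        elif not bounds.low <= value <= bounds.high:
            alerts.append(f"{name}={value} outside [{bounds.low}, {bounds.high}]")
    return alerts


alerts = check({"p95_latency_s": 9.5, "tool_call_success_rate": 0.97})
print(alerts)
```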

Semi-automated approaches combine algorithms with human input to assess metrics that require more context. For instance, natural language processing can evaluate the quality of agent communications, while human reviewers focus on the nuances of intent resolution and coherence. By sampling workflow executions and flagging potentially problematic cases, this method ensures efficiency while still addressing complex issues.
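One way to sketch the sampling step: always send flagged executions to reviewers, and add a small random sample of the rest so that unflagged problems still have a chance of being seen. The record fields (`confidence`, `retries`) and the thresholds are hypothetical choices for this example.

```python
import random


def select_for_review(executions, sample_rate=0.05, confidence_floor=0.6, seed=None):
    """Split executions into (flagged, random sample of the remainder)."""
    rng = random.Random(seed)
    # Flag runs that look risky on cheap, automated signals.
    flagged = [
        e for e in executions
        if e["confidence"] < confidence_floor or e["retries"] > 2
    ]
    flagged_ids = {e["id"] for e in flagged}
    rest = [e for e in executions if e["id"] not in flagged_ids]
    # Review at least one unflagged run whenever any exist.
    k = min(len(rest), max(1, round(len(rest) * sample_rate))) if rest else 0
    return flagged, rng.sample(rest, k)


executions = [{"id": i, "confidence": 0.9, "retries": 0} for i in range(100)]
executions[3]["confidence"] = 0.2   # low-confidence run
executions[7]["retries"] = 5        # run that retried repeatedly
flagged, sampled = select_for_review(executions, seed=7)
print([e["id"] for e in flagged], len(sampled))
```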

Manual evaluation is indispensable for subjective metrics like business alignment and decision quality. Regular human reviews of agent decisions, customer interactions, and broader outcomes provide insights that automated tools can't capture. Using standardised rubrics helps ensure consistency and reliability in these evaluations. The frequency of manual reviews should depend on the importance of the workflow and how often the system changes. For example, customer-facing workflows might need daily reviews, while internal processes could be evaluated weekly or monthly.

Measurement infrastructure for agentic workflows should log both inputs and outputs, along with the reasoning behind decisions whenever possible. This detailed logging allows for retrospective analysis, helping identify patterns that might not be obvious in real-time.

For organisations developing agentic workflows, it's crucial to build monitoring tools into the system from the outset. Retrofitting monitoring onto an existing system can be challenging, and the autonomous nature of these workflows demands deeper visibility than traditional automation systems can provide.

Logging and Observability Best Practices

Effective logging and observability are critical for troubleshooting and fine-tuning agentic workflows. Unlike traditional systems, where failure modes can often be anticipated, agentic workflows make decisions autonomously, so detailed records are needed to understand what occurred, why it happened, and how to avoid similar issues in the future.

The complexity of agentic workflows is what sets them apart. These systems don't just follow a set of predefined steps - they evaluate, adapt, and make decisions based on context. Without a robust logging and observability framework, it becomes almost impossible to troubleshoot issues or learn from the system's behaviour. Let’s explore the key techniques for implementing effective logging and observability.

Logging Techniques

Structured logging is the backbone of monitoring an agentic workflow. Capturing every interaction, decision, and outcome in a consistent format such as JSON keeps the data organised and machine-readable. JSON is a good fit because nested objects can represent the hierarchy of decisions in a structured way.

One of the most important aspects of logging is recording prompts and responses. This means not just documenting the final output but also the reasoning process behind each decision. For example, if an agent selects a specific tool or takes a particular action, logging the context and rationale behind that choice provides valuable insights for debugging and optimisation.
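The sketch below shows one way to emit a decision as a single JSON log line using Python's standard `logging` module. The `JsonFormatter` class, the `fields` key passed through `extra`, and all field names (`workflow_id`, `rationale`, and so on) are assumptions for illustration rather than an established schema. The example writes to an in-memory stream so the output can be inspected; a real deployment would write to a file or log collector.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Structured fields arrive via logger.info(..., extra={"fields": {...}}).
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry, default=str)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("agent.decisions")
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False

logger.info("tool_selected", extra={"fields": {
    "workflow_id": "wf-123",
    "prompt": "Find the customer's latest invoice",
    "response": "Calling invoice_search",
    "tool": "invoice_search",
    "rationale": "request mentions an invoice and a customer id is in context",
}})
entry = json.loads(stream.getvalue())
print(entry)
```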

Tracking token consumption is another key practice. By logging token usage alongside the quality of the outputs, you can identify inefficiencies, such as when the system uses more resources than necessary or generates overly complex responses. This helps in balancing performance with cost.
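A small sketch of recording token usage next to a quality score, so that expensive but low-quality workflows stand out. `TokenLedger` and the 0 to 1 quality score are hypothetical; the token counts are assumed to come from whatever usage figures the model provider reports.

```python
from collections import defaultdict


class TokenLedger:
    """Accumulate token usage and quality scores per workflow."""

    def __init__(self):
        self._rows = defaultdict(list)

    def record(self, workflow, prompt_tokens, completion_tokens, quality):
        self._rows[workflow].append((prompt_tokens + completion_tokens, quality))

    def report(self):
        out = {}
        for workflow, rows in self._rows.items():
            tokens = sum(t for t, _ in rows)
            out[workflow] = {
                "total_tokens": tokens,
                "tokens_per_run": tokens / len(rows),
                "mean_quality": sum(q for _, q in rows) / len(rows),
            }
        return out


ledger = TokenLedger()
ledger.record("triage", prompt_tokens=100, completion_tokens=50, quality=0.8)
ledger.record("triage", prompt_tokens=200, completion_tokens=50, quality=0.6)
report = ledger.report()
print(report)
```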

Comprehensive logging of tool usage and state transitions is also essential. Document every tool interaction, including retries, failures, and the reasoning behind tool selection. For example, if an agent chooses one tool over another, understanding why it made that choice can inform future improvements.
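To make this concrete, here is a sketch of a wrapper that records every attempt at a tool call, including failures, retries, and the stated reason for choosing the tool. `call_with_audit`, the `flaky_search` tool, and the log entry fields are all hypothetical names for this example.

```python
import time


def call_with_audit(tool_name, fn, args, reason, audit_log, max_attempts=3, backoff_s=0.5):
    """Call a tool with retries, appending one audit entry per attempt."""
    for attempt in range(1, max_attempts + 1):
        entry = {"tool": tool_name, "args": args, "reason": reason, "attempt": attempt}
        try:
            result = fn(**args)
        except Exception as exc:
            entry.update(status="failed", error=repr(exc))
            audit_log.append(entry)
            if attempt == max_attempts:
                raise  # out of retries: surface the original error
            time.sleep(backoff_s * attempt)  # simple linear backoff
        else:
            entry["status"] = "ok"
            audit_log.append(entry)
            return result


_calls = {"n": 0}


def flaky_search(query):
    """Stand-in tool that fails on its first call only."""
    _calls["n"] += 1
    if _calls["n"] == 1:
        raise TimeoutError("upstream timeout")
    return f"results for {query}"


audit_log = []
result = call_with_audit(
    "search", flaky_search, {"query": "vat rules"},
    reason="question needs current information", audit_log=audit_log, backoff_s=0,
)
print(result, [e["status"] for e in audit_log])
```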

Error context logging goes beyond simply noting that an error occurred. It’s important to capture detailed context and state information around the error. This extra layer of detail often proves more useful than the error message itself when diagnosing and resolving issues.
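As a sketch, an error record might bundle the exception with the workflow step, a snapshot of agent state, and the last few events leading up to the failure. The `error_record` helper and its field names are assumptions for illustration.

```python
import traceback


def error_record(exc, *, step, state, recent_events, max_events=5):
    """Build a log-ready dict describing an error and its surrounding context."""
    return {
        "error_type": type(exc).__name__,
        "message": str(exc),
        "traceback": traceback.format_exception(type(exc), exc, exc.__traceback__),
        "step": step,
        "state": dict(state),  # copy, so later mutation does not alter the record
        "recent_events": list(recent_events)[-max_events:],
    }


events = [f"event-{i}" for i in range(10)]
try:
    {}["invoice_id"]  # simulate a lookup that fails mid-workflow
except KeyError as exc:
    record = error_record(
        exc, step="invoice_lookup", state={"customer": "c-1"}, recent_events=events
    )
print(record["error_type"], record["step"], record["recent_events"])
```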

Finally, logging should include performance metadata such as response times, resource usage, and system load during workflow execution. This data is invaluable for identifying bottlenecks and improving overall system performance.

Improving Observability

Strong logging practices lay the groundwork for observability, which takes things a step further by providing real-time insights into how workflows are performing.

Real-time tracing is particularly important for agentic workflows. Unlike batch systems, these workflows often require immediate intervention when issues arise. Real-time tracing allows monitoring teams to identify and address problems as they happen, preventing them from escalating.

To make tracing effective, use correlation IDs to link related activities across different components. For example, when an agent makes a series of decisions or interacts with multiple tools, these IDs help piece together the full sequence of events. This is especially crucial for workflows that span multiple services or involve collaboration between agents.
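One possible way to attach a correlation ID to every log line is sketched below, using Python's `contextvars` and a `logging.Filter`. The names `correlation_id`, `CorrelationFilter`, `ListHandler`, and `start_workflow` are hypothetical, and the in-memory handler exists only so the output can be inspected here.

```python
import contextvars
import logging
import uuid

# Holds the ID of the workflow currently executing in this context.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


class ListHandler(logging.Handler):
    """Collect formatted lines in memory (demo only)."""

    def __init__(self) -> None:
        super().__init__()
        self.lines = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


handler = ListHandler()
handler.addFilter(CorrelationFilter())
handler.setFormatter(logging.Formatter("%(correlation_id)s %(name)s %(message)s"))
log = logging.getLogger("agent.trace_demo")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False


def start_workflow() -> str:
    """Generate a fresh ID and make it current for subsequent log calls."""
    cid = uuid.uuid4().hex
    correlation_id.set(cid)
    return cid


cid = start_workflow()
log.info("planner selected tool=search")
log.info("tool returned 3 results")
print("\n".join(handler.lines))
```

A context variable keeps the ID separate per thread or async task, so concurrent workflows do not overwrite each other's IDs.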

When workflows interact with external systems, distributed tracing becomes essential. Every API call, database query, or service interaction should be traced and tied back to the original workflow. This level of visibility helps pinpoint external dependencies that might be causing slowdowns or other issues.
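The article does not name a propagation format, so the sketch below assumes the W3C Trace Context `traceparent` header (version, 32-hex trace ID, 16-hex parent span ID, flags). The two helpers are hypothetical and hand-rolled to show the idea; in practice a tracing library would normally generate and propagate these values.

```python
import secrets


def new_traceparent() -> str:
    """Start a new trace: version 00, random trace ID and span ID, sampled flag 01."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Keep the trace ID but mint a new span ID for an outgoing call."""
    version, trace_id, _parent_span, flags = parent.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


root = new_traceparent()          # created when the workflow starts
child = child_traceparent(root)   # created for each API call or query
headers = {"traceparent": child}  # sent with the outgoing request
print(root)
print(child)
```

Because every outgoing call carries the same trace ID, spans recorded by downstream services can be tied back to the originating workflow.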

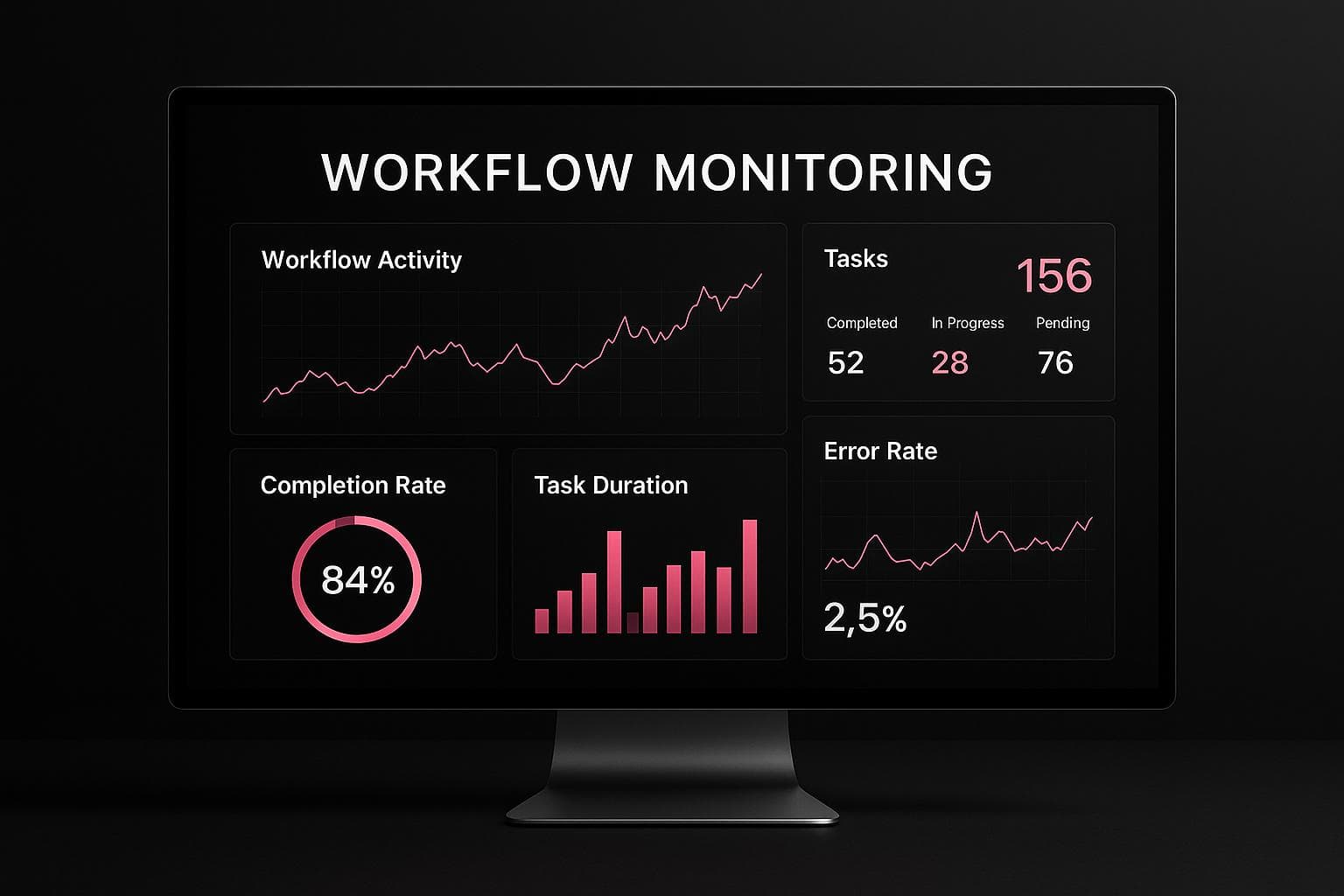

Custom metrics dashboards can provide a clear view of how well the system is functioning. These dashboards might track metrics like decision quality, reasoning patterns, or the success rate of different tool combinations. The goal is to give operators an intuitive understanding of not just what the system is doing, but how effectively it’s solving problems.

Anomaly detection in agentic workflows requires a more nuanced approach than in traditional systems. Simple threshold-based alerts often create too much noise because agentic systems naturally exhibit more variability. Instead, machine learning-based anomaly detection can identify subtle deviations from normal behaviour, even if individual metrics seem fine.
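A full machine learning detector is beyond a short example, so the sketch below substitutes a simpler statistical stand-in: a rolling robust z-score based on the median and the median absolute deviation (MAD). It is not machine learning, but it shows the underlying idea of comparing each value to recent behaviour instead of a fixed threshold. The class name, window size, and the 3.5 cut-off are assumptions for illustration.

```python
from collections import deque
from statistics import median


class RollingAnomalyDetector:
    """Flag values that sit far from the recent median, scaled by the MAD."""

    def __init__(self, window=50, threshold=3.5, min_history=10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.values) >= self.min_history:
            med = median(self.values)
            mad = median(abs(v - med) for v in self.values)
            if mad > 0:  # with no spread in the history, skip scoring
                # 0.6745 rescales the MAD to match a standard deviation
                # for normally distributed data.
                score = 0.6745 * abs(value - med) / mad
                is_anomaly = score > self.threshold
        self.values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=20)
baseline = [1.0, 1.1, 0.9, 1.2, 1.0, 0.8, 1.1, 1.0, 0.9, 1.05, 1.1, 0.95]
baseline_flags = [detector.observe(v) for v in baseline]
spike_flag = detector.observe(6.0)  # e.g. a latency spike in seconds
print(baseline_flags, spike_flag)
```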

For troubleshooting, debug replay capabilities are indispensable. These allow teams to recreate workflow executions for analysis. This is especially useful for agentic workflows, where the same inputs might produce different outputs depending on the system’s state or recent learning. Replay tools help pinpoint issues and test potential fixes.
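A minimal record-and-replay sketch follows. During a live run, a `Recorder` stores each tool result on a "tape"; later, a `Replayer` feeds the same results back so the workflow logic can be re-run deterministically. All names here (`Recorder`, `Replayer`, `get_order`, `get_fx_rate`) are hypothetical, and the approach assumes tool results are JSON-serialisable.

```python
import json


class Recorder:
    """Run tools for real and record each call and result."""

    def __init__(self):
        self.tape = []

    def call(self, name, fn, **kwargs):
        result = fn(**kwargs)
        self.tape.append({"tool": name, "kwargs": kwargs, "result": result})
        return result


class Replayer:
    """Return recorded results instead of calling the tools."""

    def __init__(self, tape):
        self._tape = iter(tape)

    def call(self, name, fn=None, **kwargs):
        entry = next(self._tape)
        if (entry["tool"], entry["kwargs"]) != (name, kwargs):
            raise RuntimeError(f"replay diverged: expected {entry['tool']}, got {name}")
        return entry["result"]


_rates = iter([1.27, 1.31])  # the rate changes between calls, as a live feed would


def get_fx_rate(currency):
    return next(_rates)


def get_order(order_id):
    return {"amount": 100.0, "currency": "USD"}


def workflow(tools, order_id):
    order = tools.call("get_order", get_order, order_id=order_id)
    rate = tools.call("get_fx_rate", get_fx_rate, currency=order["currency"])
    return round(order["amount"] * rate, 2)


recorder = Recorder()
live = workflow(recorder, "o-1")
saved = json.dumps(recorder.tape)                  # persist alongside the logs
replayed = workflow(Replayer(json.loads(saved)), "o-1")
print(live, replayed)
```

The divergence check matters when testing a fix: if the changed workflow asks for a different tool or different arguments, the replay stops instead of silently returning mismatched data.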

Performance profiling should focus on the efficiency of the system’s decision-making process, not just its computational performance. By profiling reasoning time and decision complexity, you can identify areas where the system can be streamlined.

Lastly, observability should support A/B testing of different agent configurations or reasoning approaches. This enables teams to experiment with changes while maintaining visibility into how those changes affect performance and decision quality.
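One common way to split traffic for such experiments is deterministic hashing, sketched below: the same workflow ID always lands in the same variant, so its logs and metrics can be compared consistently. The function name, variant labels, and the 10% share are assumptions for this example.

```python
import hashlib


def assign_variant(workflow_id, experiment, variants=("control", "candidate"),
                   candidate_share=0.1):
    """Map a workflow to a variant, stable across calls and processes."""
    digest = hashlib.sha256(f"{experiment}:{workflow_id}".encode()).digest()
    # Turn the first 8 bytes into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return variants[1] if bucket < candidate_share else variants[0]


first = assign_variant("wf-42", "prompt-v2")
second = assign_variant("wf-42", "prompt-v2")
candidate_count = sum(
    assign_variant(f"wf-{i}", "prompt-v2") == "candidate" for i in range(10_000)
)
print(first, candidate_count)
```

Logging the assigned variant with each execution lets dashboards break down decision quality and latency by configuration.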

Observability for agentic workflows requires a shift in mindset. It’s not just about knowing when something breaks - it’s about understanding how the system thinks, learns, and adapts. This deeper insight enables continuous improvement, helping organisations build systems that are not only resilient but also smarter over time.

Guardrails for Safety

Guardrails refer to the policies, controls, and monitoring systems designed to keep AI agents operating safely, efficiently, and within compliance boundaries. Their purpose is to minimise risks, prevent errors, and avoid unintended outcomes.

These safeguards come into play at four critical stages: pre-input, input, output, and post-output. Think of them as checkpoints that work alongside broader monitoring and error-handling strategies.

- Pre-input validation ensures that incoming data meets quality and safety standards before it even enters the system.

- Input processing takes this validated data and processes it in a way that maintains its context and relevance.

- Output validation checks the system’s responses to ensure they align with predefined rules and expectations.

- Post-output monitoring evaluates the real-world effects of the outputs, providing insights for continuous improvement.

This structured approach is key to embedding reliable control mechanisms into AI workflows, ensuring they remain safe and effective in practice.
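The four stages can be sketched as a single wrapper around an agent call. Everything specific here is a hypothetical policy chosen for illustration: the input length limit, the set of allowed actions, the refund limit, and the `demo_agent` stand-in.

```python
MAX_INPUT_CHARS = 2000                              # hypothetical limit
ALLOWED_ACTIONS = {"reply", "refund", "escalate"}   # hypothetical policy
REFUND_LIMIT = 100.0                                # hypothetical, in account currency


def run_with_guardrails(raw_input, agent, audit_log):
    # Stage 1, pre-input: reject data that fails basic quality checks.
    if not isinstance(raw_input, str) or not raw_input.strip():
        raise ValueError("pre-input: empty or non-text input")
    if len(raw_input) > MAX_INPUT_CHARS:
        raise ValueError("pre-input: input too long")

    # Stage 2, input: normalise while keeping the context the agent needs.
    request = {"text": raw_input.strip()}

    # Stage 3, output: check the proposed action against predefined rules.
    proposed = agent(request)
    if proposed.get("action") not in ALLOWED_ACTIONS:
        final = {"action": "escalate", "reason": "action not permitted"}
    elif proposed["action"] == "refund" and proposed.get("amount", 0) > REFUND_LIMIT:
        final = {"action": "escalate", "reason": "refund above limit"}
    else:
        final = proposed

    # Stage 4, post-output: record what happened so effects can be reviewed.
    audit_log.append({"request": request, "proposed": proposed, "final": final})
    return final


def demo_agent(request):
    """Stand-in agent that always proposes a large refund."""
    return {"action": "refund", "amount": 250.0}


audit = []
decision = run_with_guardrails("  Please refund my order  ", demo_agent, audit)
print(decision)
```

Escalating instead of raising at the output stage keeps the workflow moving while handing the risky decision to a person.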


Error Recovery and System Improvement

Error Detection and Recovery

Spotting errors in agent-driven workflows requires a mix of automated tools and human intervention. Subtle declines in AI model performance call for intelligent monitoring systems that can catch issues early and prevent disruptions.

Telemetry data plays a crucial role here. This includes metrics, events, logs, and traces that track errors across infrastructure and application workflows. For AI-specific tasks, indicators like prediction confidence scores, feature drift, and model version performance comparisons are particularly important.

AI-powered anomaly detection systems analyse telemetry data to identify patterns and flag even minor deviations. These systems not only detect issues but can also help trace their root causes, which reduces risk. This matters because poor implementation is a commonly cited reason AI projects fail, with failure rates as high as 85% often quoted.

To act quickly, contextual alerting mechanisms ensure that relevant information reaches the right team members - whether data scientists or DevOps engineers. Alerts carry supporting telemetry data and runbook guidance to speed up error resolution. Additionally, alert correlation helps reduce the overload of notifications in complex systems, making it easier to focus on critical issues.
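A simple form of alert correlation is to merge repeated alerts of the same kind, for the same workflow, that arrive within a short window. The sketch below does this; the alert fields, the five-minute window, and the runbook identifiers are hypothetical.

```python
def correlate(alerts, window_s=300):
    """Group alerts sharing (workflow, kind) that arrive within window_s of each other."""
    groups = []
    latest = {}  # (workflow, kind) -> most recent group for that key
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        key = (alert["workflow"], alert["kind"])
        group = latest.get(key)
        if group is not None and alert["ts"] - group["last_ts"] <= window_s:
            # Same problem still firing: fold into the existing notification.
            group["count"] += 1
            group["last_ts"] = alert["ts"]
        else:
            group = {
                "workflow": alert["workflow"],
                "kind": alert["kind"],
                "first_ts": alert["ts"],
                "last_ts": alert["ts"],
                "count": 1,
                "runbook": alert.get("runbook"),  # guidance travels with the alert
            }
            latest[key] = group
            groups.append(group)
    return groups


alerts = [
    {"ts": 0, "workflow": "billing", "kind": "tool_timeout", "runbook": "RB-12"},
    {"ts": 30, "workflow": "support", "kind": "low_confidence", "runbook": "RB-03"},
    {"ts": 60, "workflow": "billing", "kind": "tool_timeout", "runbook": "RB-12"},
    {"ts": 120, "workflow": "billing", "kind": "tool_timeout", "runbook": "RB-12"},
    {"ts": 1000, "workflow": "billing", "kind": "tool_timeout", "runbook": "RB-12"},
]
notifications = correlate(alerts)
print([(n["workflow"], n["count"]) for n in notifications])
```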

While automation is powerful, human oversight remains indispensable. Around 80% of AI projects require human evaluation and feedback. Mechanisms like random reviews of agent actions and decisions ensure operations stay within acceptable limits.

Real-time logging and centralised storage systems capture an agent's actions, inputs, outputs, and errors. This not only supports immediate recovery but also provides valuable data for analysing incidents after they occur.

By combining these detection methods with continuous monitoring, organisations can address errors swiftly and improve their systems over time.

Continuous Monitoring and Updates

Once errors are detected, continuous monitoring ensures they’re addressed promptly. Tools like version control, paired with automated remediation, allow for quick recovery from known issues while keeping a clear audit trail. For instance, if a monitoring system identifies a recurring problem, automated remediation can step in to execute corrective actions, such as rolling back to a previous model version.
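The rollback example can be sketched as follows. `ModelRegistry`, `remediate`, the version labels, and the 5% error-rate threshold are hypothetical; a real system would call its own deployment tooling in place of the in-memory list used here.

```python
class ModelRegistry:
    """Track deployed model versions and keep an audit trail of changes."""

    def __init__(self, versions):
        self.history = list(versions)  # oldest to newest; the last one is active
        self.audit = []

    @property
    def active(self):
        return self.history[-1]

    def rollback(self, reason):
        if len(self.history) < 2:
            raise RuntimeError("no previous version to roll back to")
        retired = self.history.pop()
        self.audit.append(
            {"action": "rollback", "from": retired, "to": self.active, "reason": reason}
        )
        return self.active


def remediate(registry, error_rate, threshold=0.05):
    """Roll back when the observed error rate exceeds the threshold."""
    if error_rate > threshold:
        return registry.rollback(
            f"error rate {error_rate:.1%} above threshold {threshold:.1%}"
        )
    return registry.active


registry = ModelRegistry(["v1", "v2", "v3"])
healthy = remediate(registry, error_rate=0.02)    # within limits, nothing changes
after_spike = remediate(registry, error_rate=0.12)
print(healthy, after_spike, registry.audit)
```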

Implementing Best Practices with Antler Digital

Once you’ve got a handle on metrics, logging, and observability, the next step is putting those practices into action. Successfully monitoring agentic workflows isn’t just about having the right tools - it’s about combining them with well-planned processes and the right expertise. For businesses navigating this path, working with specialists who understand both the technical and operational sides can make all the difference. A solid starting point? Comprehensive logging, observability, and control systems.

Start by building a strong monitoring foundation. This means setting up reliable logging systems, deploying real-time observability tools, and establishing control planes with proper guardrails. Your monitoring setup should track telemetry data across every stage of your workflow - from the initial agent actions all the way to the final outputs.

Instead of rolling out all monitoring capabilities at once, take a phased approach. Begin with the basics, like tracking core metrics and identifying errors. Then, layer in AI-driven analytics and human oversight. Gradually introduce more advanced features as your team becomes comfortable with the new processes. This way, you can ensure operational stability while adapting to changes.

Antler Digital is a go-to partner for businesses looking to implement scalable agentic workflows and AI integrations. They’ve worked with SMEs across industries like FinTech, SaaS, and environmental platforms, offering a phased, project-based delivery model that ensures a smooth transition from design to monitoring. Their expertise in full-service technical management means they can handle everything - from designing your system to implementing and maintaining monitoring solutions. This ensures your workflows stay efficient and reliable.

By focusing on project-based solutions, Antler Digital allows businesses to integrate monitoring best practices without the need for costly in-house teams. They don’t just retrofit systems after the fact; instead, they build monitoring infrastructure from the ground up, tailored to your needs.

When implementing these practices, it’s essential to consider the specific needs of your industry. For example, a FinTech company monitoring financial transactions will have very different compliance and performance requirements compared to an environmental platform tracking carbon offset data. Tailoring your approach to these unique demands is critical.

Another key factor is budgeting for the ongoing costs of monitoring. This includes expenses like tool subscriptions, log data storage, and infrastructure maintenance. Many businesses overlook these operational costs, which can affect the long-term viability of their monitoring systems.

Finally, make monitoring an integral part of your workflow design from the outset. This ensures continuous improvement and keeps your systems performing at their best.

FAQs

How do monitoring requirements differ between traditional workflows and agentic workflows?

Traditional workflows stick to set processes and often depend on manual oversight to keep things on track - ensuring compliance, managing costs, and maintaining uniformity. These systems tend to be rigid, offering little room for variation in outcomes.

On the other hand, agentic workflows, powered by AI agents, bring a whole new level of flexibility. They rely on real-time decision-making and can carry out tasks autonomously. While this adds more complexity and variability, it also demands close attention to AI performance metrics, adaptability, and decision accuracy. Moving from static systems to these dynamic ones shifts the focus of monitoring to assessing the intelligence and overall effectiveness of these workflows.

What’s the best way for organisations to balance automated and manual oversight when monitoring agentic workflows?

To strike the right balance between automated systems and manual oversight in agentic workflow monitoring, organisations should leverage real-time monitoring tools. These tools are designed to quickly identify anomalies and performance issues, ensuring smooth operations without the need for constant human intervention.

However, human oversight remains crucial for verifying flagged issues, making important decisions, and managing complex situations that require judgement and experience. Establishing clear escalation protocols ensures that automated alerts are promptly assessed by the appropriate team members. This combination of automation and human input strengthens clarity, accountability, and operational stability, all while adhering to UK compliance standards and industry best practices.

By integrating automation with human expertise, businesses can keep their systems running efficiently while minimising risks and maintaining strong operational performance.

How do guardrails ensure safety and compliance in agentic workflows, and what are the best practices for implementing them?

Guardrails play a crucial role in agentic workflows, serving as safeguards to ensure safety, compliance, and ethical practices. They establish boundaries that guide AI behaviour, reduce risks, and prevent unintended consequences, all while staying aligned with legal requirements and organisational policies.

For effective implementation, prioritise adherence to policies, real-time risk monitoring, and ongoing feedback loops. Keeping detailed records of decisions is equally important, as it enhances transparency and supports audits. These steps not only protect the system from errors or misuse but also help maintain its reliability and efficiency in operation.
